Probing RNN Encoder-Decoder Generalization of Subregular Functions using Reduplication

Abstract

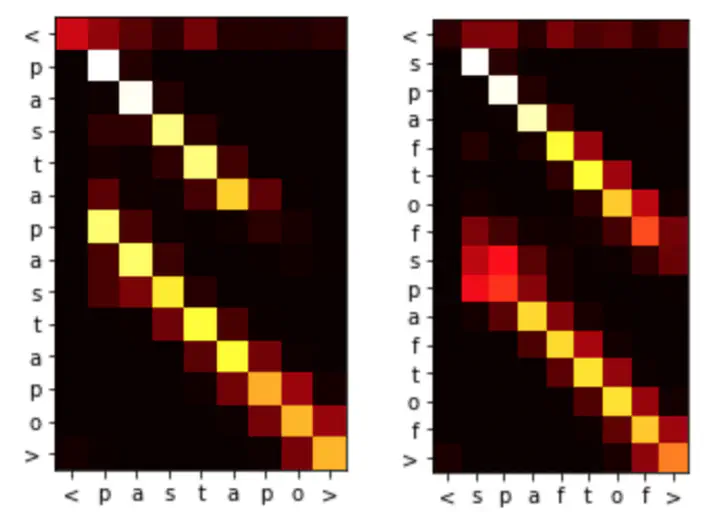

This paper examines the generalization abilities of encoder-decoder networks on a class of subregular functions characteristic of natural language reduplication. We find that, for the simulations we run, attention is a necessary and sufficient mechanism for learning generalizable reduplication. We examine attention alignment to connect RNN computation to a class of 2-way transducers.
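As an illustration of the kind of function at issue, the sketch below computes total reduplication (mapping a string w to ww) in the manner of a 2-way transducer: the read head copies the input, moves back to the left edge, and copies it again. This is a hypothetical toy under stated assumptions, not the construction or the networks used in the paper; the function name `total_reduplication`, the state names, and the `<` and `>` boundary markers are all introduced here for illustration.

```python
def total_reduplication(word: str) -> str:
    """Map w to ww by simulating a read head that can move left and right.

    Assumption of this sketch: the input is wrapped in boundary markers
    "<" and ">", and the word itself contains neither marker.
    """
    tape = "<" + word + ">"
    out = []
    pos = 1                  # start on the first symbol after "<"
    state = "first_pass"

    while state != "done":
        symbol = tape[pos]
        if state == "first_pass":
            if symbol == ">":
                # Right edge reached: turn around without emitting anything.
                state = "rewind"
                pos -= 1
            else:
                out.append(symbol)
                pos += 1
        elif state == "rewind":
            if symbol == "<":
                # Left edge reached: begin the second copy.
                state = "second_pass"
                pos += 1
            else:
                pos -= 1
        elif state == "second_pass":
            if symbol == ">":
                state = "done"
            else:
                out.append(symbol)
                pos += 1

    return "".join(out)


if __name__ == "__main__":
    print(total_reduplication("abc"))
```

The machine uses a fixed number of states regardless of input length; the copy is produced by the head revisiting the input rather than by memorizing it, which is the behavior that a 1-way device reading strictly left to right cannot reproduce for unbounded inputs.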

Publication

In Proceedings of the Society for Computation in Linguistics